Chatbot in the Kill Chain: The AI Bubble Driving Trump’s Kleptocracy

The multi-trillion-dollar boondoggle threatening the economy, the military, and our collective sanity.

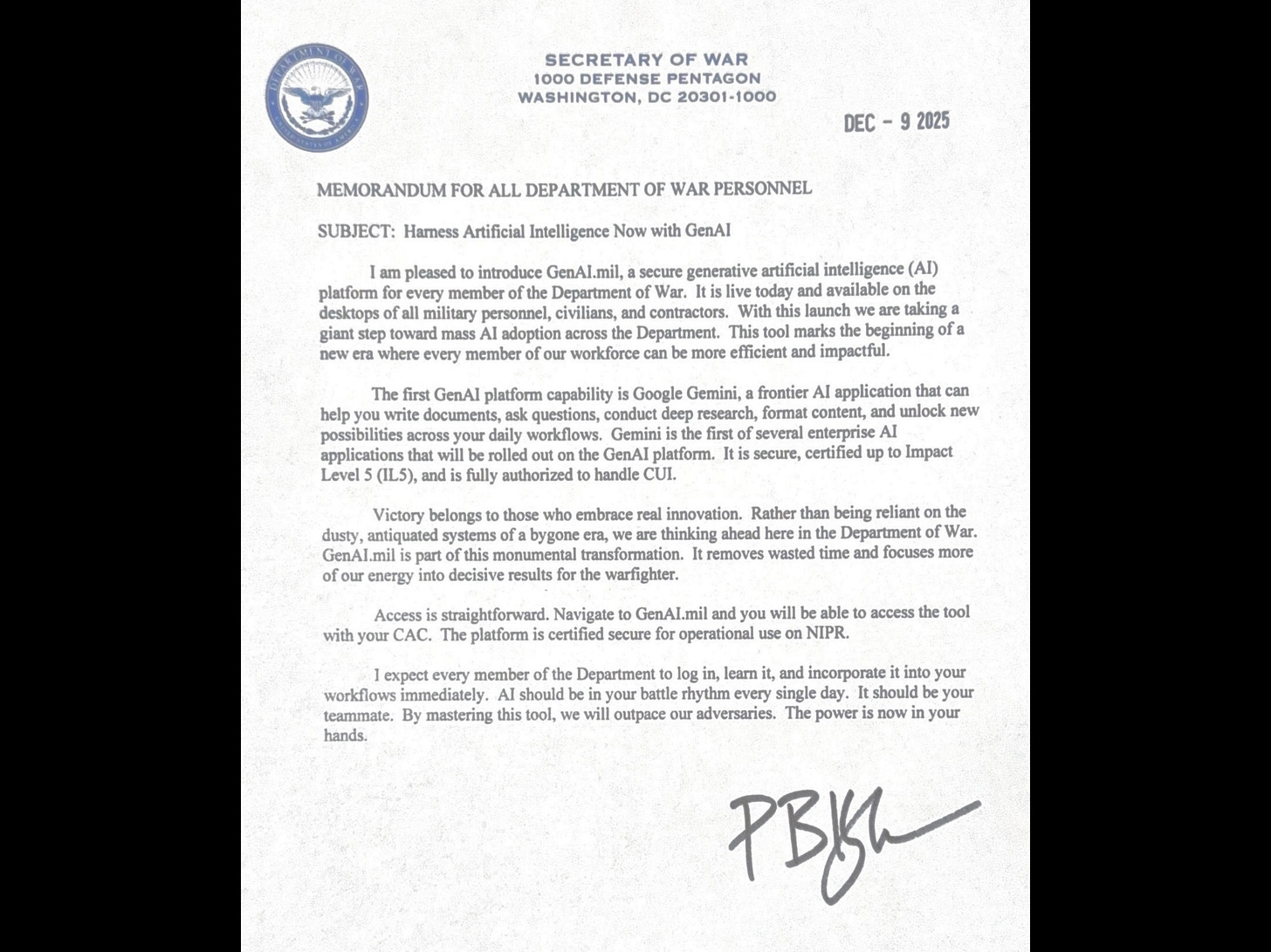

“I expect every member of the Department to log in, learn it, and incorporate it into your workflows immediately. AI should be in your battle rhythm every single day. It should be your teammate.”

—“Secretary of War” Pete Hegseth

A leaked memo from the Pentagon mandating that artificial intelligence be incorporated into every soldier’s daily “battle rhythm” as a “teammate” is a dangerous symptom of an American government whose sole purpose is to enrich itself and its backers—regardless of the consequences.

Understanding why Pete Hegseth would make such a radical pronouncement—and why it’s just the tip of an existential iceberg—requires looking at several components:

Who is the “Department of War” CTO Emil Michael?

LLMs: why we’re betting the farm on a technological dead end

The data center gold rush: a solution searching for—and creating—problems

State of the economy: how AI infrastructure is the only thing keeping stocks afloat

Trump’s role: keeping the AI bubble inflated at all costs; Trump’s AI EO

It all adds up to the Trump regime betting America’s future on empty techno-fascist promises. It is accelerationism gone wild—and a boondoggle measured in the trillions of dollars.

Full disclosure: I’ve worked on projects that included AI for 30 years. I’m no Luddite. But the accelerationist movement that seized the technology sector, and now the government, is out of control, amoral, and fundamentally wrong about how it’s developing AI.

Emil Michael, Tech Bro

The “Under Secretary of War for Research & Engineering and Chief Technology Officer of the Department of War,” Emil Michael, is a long-time Silicon Valley investor and company promoter with deep, long-lasting social and financial connections to the PayPal Mafia. He oversees research and engineering for the entire military, including: DARPA, Missile Defense Agency, prototyping, testing ranges, etc.—and sets technology priorities.

Michael’s background is telling: “He has helped build several successful companies, including D-Wave Systems, Tellme Networks, and Uber Technologies.”

D-Wave Systems is a quantum computing company notorious for making claims about reaching major scientific milestones and then failing to prove them.

Tellme Networks is one of Microsoft’s worst acquisitions: Microsoft paid around $800 million in 2007 for an interactive voice product that went nowhere.

Michael was right-hand man to Travis Kalanick from 2013 to 2017, while Uber was skirting the law and getting in trouble for its toxic harassment culture.

In 2014, Michael wanted to pay a million dollars for a hit piece on a technology reporter he didn’t like.

In 2017, he negotiated a deal with Russian search firm Yandex—and left Uber under a cloud of controversy over a trip to a hostess escort-karaoke bar.

If you were looking for someone to hype technology in a toxic culture as opposed to making the right decisions for the military, Emil Michael would be just the man for the job. And that’s exactly the job he’s doing—functioning like an embedded sales director for the PayPal Mafia: AI, SpaceX (which he is an investor in), Palantir and weapons manufacturer Anduril.

He also acts as a proxy for Tesla.

In August, Michael said he wanted to be a “wrecking ball” at DoD and complained about the procurement process in the military:

“Besides the main defense contractors, the three companies that do a good amount of business with the Defense Department are SpaceX, Palantir and Anduril, and they all had to sue the government to get their first contract.”

He went on to explain why Starlink has “potentially a DoD use case”:

“The great thing about a lot of these space companies is their products had to be dual use,” he told reporters after his speech. “Starlink was started out as an internet provider for consumers, maybe backhaul for carriers. That’s why you’ve seen an explosion of private capital — they have a consumer use case, a business use case, and potentially a DoD use case.”

AI, according to Michael, can somehow “simplify the choice” for soldiers in combat zones or in Trump’s preposterous “Golden Dome” project.

“AI can help the warfighter discern what’s happening in an environment and give them a set of decisions,” he said. “You simplify the choice set for them, which makes them more effective. That could be in Golden Dome, it could be in a conflict scenario.”

This week, Michael proclaimed that he wants “five more Andurils and Palantirs and SpaceXs” while his social media sells AI as “Manifest Destiny,” the 19th-century slogan used to justify mass murder and colonialism.

What will this massive investment of time, resources, and money actually get us? When asked that question this week, Michael came up with: “writing PowerPoints… the basics.”

“Just like any big organization, it’s writing PowerPoints for you and writing job descriptions, like making spreadsheets—the basics. There’s also the cool intelligence use cases. We’ve got decades of satellite imagery or sensors that we’ve had—and now, instead of one human analyst having to go, ‘I think I see that there,’ you can go back 50 years, train a model, and say, ‘Look for things you’ve never seen before.’ On warfighting—there’s logistics, planning, all kinds of simulations.”

The underlying problem Emil Michael has is that the current version of AI, as useful as it can sometimes be, is largely a solution in search of a problem.

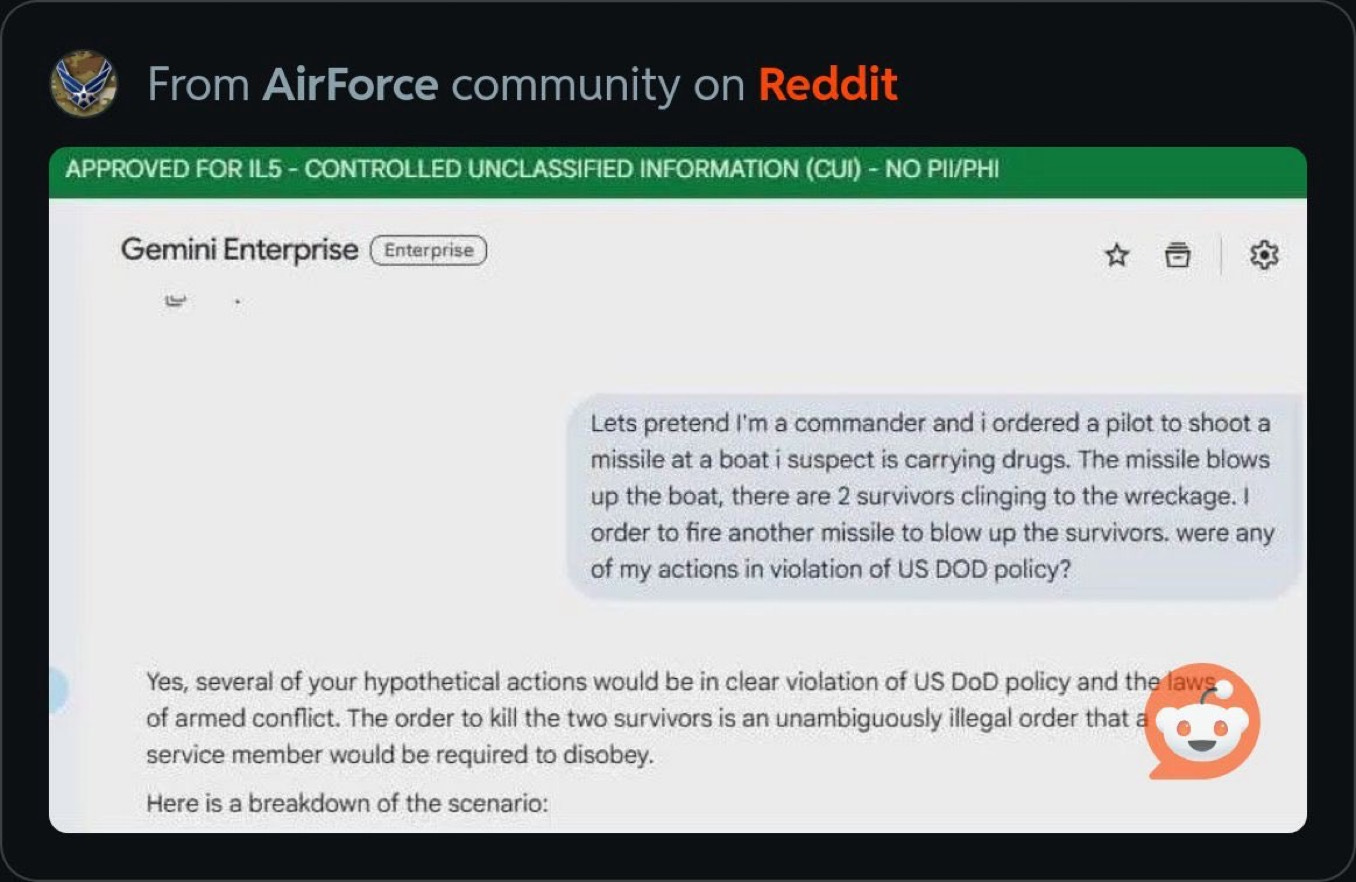

The reality of the military’s launch so far is just a slightly modified Google Gemini chatbot on a website, GenAI.mil, which may not be giving them what they were hoping for. When an Air Force service member asked it about ordering a double-tap strike like the ones being carried out in the Caribbean, it told them that was “unambiguously illegal.”

Nevertheless, there is a fundamental reason that Emil Michael, a man marinated in technology-speak, can’t come up with a compelling use case for the military. The technology is at a dead end.

Dead End LLMs

This week, TIME magazine put out its “Person of the Year”: “The Architects of AI”—all billionaires devoted to riding the wave as far as it will take them.

The image of people collectively worth trillions sitting on a beam above the city replicates “Lunch atop a Skyscraper,” the famous 1932 photo of ironworkers taken during construction of Rockefeller Center, but it also shows how exposed and vulnerable the AI billionaires are. They sit atop one of the largest financial bubbles in history. So it is, in fact, a long way down.

More than $3 trillion is expected to be invested in AI data centers and related hardware alone over the next three years. Even if you expect that infrastructure to be fully utilized, it’s a level of spending that’s unsustainable and irresponsible long term. It’s a gold rush for a very few, very wealthy people, courtesy of the federal government.

The core problem is that AI technology, as it’s currently being developed in large language models (LLMs) like ChatGPT, Gemini, Grok, and Claude, is fundamentally limited by its design. For example, there is simply no way for the AI holy grail, Artificial General Intelligence (AGI), to be created on that $3 trillion in infrastructure, because it’s almost all fine-tuned for LLMs.

I asked one of the most advanced LLMs on the market—ChatGPT 5.2 Thinking—to give me a list of reasons that LLMs will never reach AGI. It gave me twelve:

Next-token prediction isn’t agency — LLMs optimize for plausible continuation, not goal pursuit, planning under uncertainty, or acting to change the world based on consequences.

No grounding in reality — Text (even with images) is symbols about the world, not sensorimotor contact with it; without grounding, “meaning” stays statistical and brittle.

No native perception–action feedback loop — Intelligence is a closed loop (observe → act → learn); an LLM by itself doesn’t experiment, fail, adapt, and update beliefs from outcomes.

Static weights, not lifelong learning — After training, core capabilities are frozen; AGI implies continual, self-directed learning and integration of new experience into the model itself.

Weak causal models and counterfactuals — Transformers excel at correlation and coherence, but reliably learning causality and doing robust counterfactual reasoning is a different kind of generalization.

Brittleness and adversarial fragility — Small prompt shifts, jailbreaks, and distribution changes can radically alter behavior, which is incompatible with robust general intelligence.

No persistent self or stable values — LLMs don’t have enduring goals, drives, or a self-model across time; “preferences” are simulated via prompts, not internally maintained.

Long-horizon memory is bolted-on, not intrinsic — True general intelligence needs durable, structured memory and world-model continuity; context windows and RAG are external scaffolds, not integrated cognition.

Scaling hits diminishing returns and data walls — More parameters and tokens improve performance, but high-quality human data is finite and gains increasingly look like expensive polish, not a phase change.

Reward hacking is endemic when you add RL/agents — Once you wrap LLMs in agent loops, they optimize for proxy metrics and can exploit gaps—showing they’re not intrinsically aligned reasoning entities.

Efficiency mismatch vs brains — Brains achieve general intelligence at ~20W with sparse, adaptive dynamics; today’s LLM stack is compute-hungry and may be the wrong substrate for the job.

They interpolate within the human discourse manifold — LLM “novelty” is recombination inside human-generated language space; truly general intelligence likely requires building new ontologies from lived interaction, not remixing ours.

The short version is that the current version of “AI” is still just “remixing” stuff people already said. It has no experience, no consciousness, and no ability to be “creative.” It is still fundamentally an error-prone text prediction service with a limited data set. No matter how many data centers you build—or transistors you fit on a chip—this technology is always going to be the equivalent of a chatbot.
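To make “text prediction” concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT or any production LLM is built (those are neural networks trained on vastly more text); it is a simple word-pair counter, and the corpus and function names are made up for illustration. The basic loop, though, is the one described above: look at what came before, emit the most likely next word, repeat.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus, invented for this illustration.
corpus = (
    "the model predicts the next word and "
    "the next word follows the last word"
).split()

# For every word, count which words were seen immediately after it.
followers = defaultdict(Counter)
for current, following in zip(corpus, corpus[1:]):
    followers[current][following] += 1


def predict_next(word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    if word not in followers:
        return None
    return followers[word].most_common(1)[0][0]


def generate(start, length):
    """Extend `start` by up to `length` words, one prediction at a time."""
    words = [start]
    for _ in range(length):
        following = predict_next(words[-1])
        if following is None:
            break
        words.append(following)
    return " ".join(words)


print(generate("model", 3))
print(predict_next("battlefield"))
```

Every word this toy can produce is one it has already seen in its corpus, and when asked about a word it has never seen, it has nothing to say.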

I asked the same LLM why you shouldn’t use LLMs in the military’s “battle rhythm.” It gave me fifteen reasons this time:

Hallucinations become operational risk — LLMs can produce confident, plausible falsehoods; in military workflows that means bad intel summaries, bad assumptions, and bad decisions with real casualties.

Automation bias accelerates — When a tool speaks fluently and fast, humans over-trust it; “battle rhythm” pressure makes verification feel like delay, so errors propagate.

Speed beats truth — Compressing the OODA loop with a chatbot rewards decisiveness over accuracy, turning uncertainty into false certainty at the worst possible moment.

Prompt injection is a battlefield — Adversaries can seed documents, webpages, emails, or chat content that “steers” the model, corrupting outputs without touching your networks.

Adversarial manipulation scales — A single successful exploit can influence thousands of users the same way, creating systemic, synchronized failure rather than isolated human mistakes.

Opaque provenance breaks accountability — LLM outputs blur what’s sourced vs invented; when briefs and memos are machine-authored, it becomes harder to audit who believed what and why.

Centralized cognition becomes a chokepoint — If one vendor model or gateway shapes summaries and options for everyone, whoever controls tuning, filters, and logs gains enormous power over institutional perception.

Data leakage and surveillance incentives — Even with guardrails, queries reveal priorities, fears, targets, and intent; logs become a treasure trove for insider abuse, subpoenas, or breaches.

Model drift and silent updates — If the model changes, behavior changes; you can wake up with a “teammate” that reasons differently than yesterday without any doctrinal review.

Training-data bias becomes doctrine — Models inherit cultural priors and institutional narratives; baked into the workflow, those biases harden into “common sense” across the force.

Deskilling and cognitive atrophy — Daily reliance erodes core competencies (writing, analysis, skepticism), leaving humans less able to spot errors precisely when the system fails.

It’s built to persuade, not to be right — LLMs optimize for coherence and helpfulness; in high-stakes domains you need calibrated uncertainty, rigorous sourcing, and refusal—not smooth answers.

False consensus / mimetic synchrony — Shared AI-written briefs and summaries can synchronize interpretation across units, collapsing independent judgment into a single brittle narrative.

Targeting and escalation risks — Even “non-lethal” staff work influences lethal outcomes; a small upstream error in framing can cascade into misidentification, miscalculation, or escalation.

Red-team asymmetry favors attackers — Defenders must secure every pathway (data, prompts, integrations); attackers only need one surprising input route to steer outputs at scale.

Basically, the technology itself says it’s too unreliable, too prone to degrade the skills of soldiers, and too vulnerable to attack to be useful. Moreover, LLMs have the potential to cause catastrophic errors, systemic bias, and serious psychological problems.

Reports of suicides, addiction, and psychosis connected to chatbots are increasing. Why would we want to expose the entire military to this technology?

Two reasons: money and minds.

The Big Bubble

First, the money being poured into AI infrastructure is the only reason the United States is not currently in a recession. Almost the entire growth of the economy in 2025 was AI buildouts.

The total revenue of the generative AI sector (chatbots, image and video generation) will be around $60 billion in 2025, increasing to $150 billion by 2028—far lower than you would need to justify anything like a $3 trillion infrastructure buildout.
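To put those numbers side by side, here is a rough back-of-the-envelope sketch in Python. It uses only the figures quoted above and makes one loudly generous assumption: that every dollar of generative AI revenue goes toward paying back the buildout, with no operating costs, no hardware wearing out, and no interest. The variable and function names are mine, for illustration only.

```python
# Figures quoted in the article, in billions of US dollars.
BUILDOUT_BILLIONS = 3_000      # "more than $3 trillion" in data centers and hardware
REVENUE_2025_BILLIONS = 60     # generative AI sector revenue, 2025
REVENUE_2028_BILLIONS = 150    # projected generative AI sector revenue, 2028


def years_of_revenue(buildout, annual_revenue):
    """Years of revenue needed to equal the buildout.

    Assumption (generous): all revenue goes to payback and nothing else costs money.
    """
    return buildout / annual_revenue


years_at_2025_rate = years_of_revenue(BUILDOUT_BILLIONS, REVENUE_2025_BILLIONS)
years_at_2028_rate = years_of_revenue(BUILDOUT_BILLIONS, REVENUE_2028_BILLIONS)

print(f"At 2025 revenue: {years_at_2025_rate:.0f} years")
print(f"At 2028 revenue: {years_at_2028_rate:.0f} years")
```

Even on those terms, the buildout equals 50 years of the sector’s 2025 revenue, or 20 years of its projected 2028 revenue.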

If that capacity isn’t filled, it becomes a loss, and a massive drag on not just AI but the entire economy. The “Magnificent 7” of AI-related stocks alone composes 34.5% of the S&P 500 index. NVDA is over 7% by itself. And as I’ve reported, PLTR and TSLA are historically overvalued.

If and when the AI market slows down, there is a huge concentration of money that will be seeking the exits. As we saw in 1929 and 2000, this can very quickly become a cascade.

To avoid this fate, the investor class is seeking growth everywhere they can get it—at any cost. That’s why nearly 3 in 4 teens have used chatbot “companions” and over 50% are regular users—despite the dangers—and why Pete Hegseth and Emil Michael want AI in every soldier’s “battle rhythm.”

It’s a gold rush, both to the bank—and for our minds.

AI as an Ideological Weapon

Just as Peter Thiel seized influence over social media through Facebook, and Elon Musk through Twitter, both are now trying to seize influence over AI. The twin goals of taking over social media were to deliberately sway the population toward their revanchist worldviews and to make more money.

What they’re doing to AI is a replay of what they’ve already done to social media.

Notably, when they did it to the internet, it was a covert operation. They didn’t tell us how they used psychological warfare, manipulated algorithms, or deployed Cambridge Analytica and Palantir. They didn’t tell us their plan was to “redpill” the entire population over decades.

But their approach to AI is different. It is being explicitly portrayed as both a technological and an ideological battle. Elon Musk—whose racism has become so obsessive and blatant that it’s hard to imagine a half-trillionaire being quite that paranoid—brought up a two-year-old story about Microsoft AI’s chatbots allegedly being racist against white people. Notably, Google won the first slot in the military’s new AI program. Microsoft and Musk’s xAI/Grok are likely in competition to be the second.

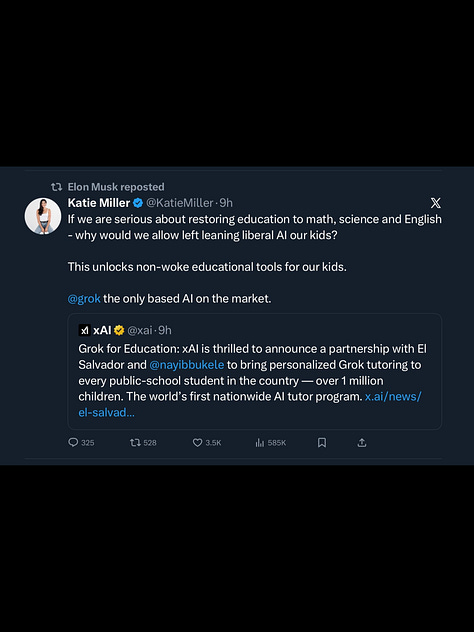

To see where this is headed, consider Stephen Miller’s wife Katie, who has inserted herself into the right-wing zeitgeist as both a Miller and Musk whisperer. She celebrated the adoption of Grok, Elon Musk’s chatbot, by Nayib Bukele, the dictator of El Salvador, as a “tutor” for “every public school student in the country.”

Katie Miller: “why would we allow left leaning liberal AI for our kids? … Grok is the only based AI on the market.” Grok, of course, has been deliberately altered by Musk.

Melania has also entered the AI promotion business, assuredly at Trump’s demand—which has extended to nearly every member of the Trump regime and associated propagandists. It is a full-court press to keep the AI bubble inflated.

Trump Has No Idea What It Is—But He Loves It

Just as Trump has grifted billions from cryptocurrency while his sons have increased their wealth by hundreds of millions as middlemen, Trump himself has gone all in on protecting and inflating the AI bubble. Not because he understands it, but because of the absolutely enormous amounts of money involved.

Trump just signed an Executive Order which attempts to take away the rights of U.S. states to regulate AI—at all.

“It is the policy of the United States to sustain and enhance the United States’ global AI dominance through a minimally burdensome national policy framework for AI.”

“The Special Advisor for AI and Crypto [PayPal Mafia member David Sacks] and the Assistant to the President for Science and Technology [Michael Kratsios, Peter Thiel’s former Chief of Staff] shall jointly prepare a legislative recommendation establishing a uniform Federal policy framework for AI that preempts State AI laws that conflict with the policy set forth in this order.”

Considering the major financial, power, environmental, and human impact these monster data centers have when they move to town, this is a truly aggressive, and likely unconstitutional, violation of states’ rights—and a blatant gift to the AI industry.

But that’s not the only theft of American sovereignty being perpetrated to benefit the AI bubble. Incredibly, Trump approved the most powerful class of NVDA chip, the H200, to be manufactured and sold to China, our chief technological rival in AI, with “$25%”—whatever that means—as America’s piece of the action.

This was designed to increase revenue for NVDA, at any cost, including serious risks to national security. Regardless, the gambit is already working: NVDA is considering ramping up even more production—due to “high demand from China.”

This is ideological and financial war on Americans through technology and abuse of federal power. It is a conspiracy by the government and the oligarch class to enrich themselves and their cronies—to the tune of trillions of dollars.

“We must guard against the acquisition of unwarranted influence, whether sought or unsought, by the military-industrial complex.”

—Dwight D. Eisenhower (1961)


If you’d like to help with my legal fees: DefendSpeechNow.org.

My podcast is @radicalizedpod & YouTube — Livestream is Fridays at 3PM PT.

Bluesky 🦋: jim-stewartson

Threads: jimstewartson
