Chatbot Doomerism: Eschatology of the AI Bubble

The AI industry is using the tactics of psychological warfare to prop up its failures before a wave of IPOs.

On the website formerly known as Twitter, this post has gone mega-viral, reaching 80 million views as of this writing.

Numerous people on all sides of the political spectrum have credulously shared it as some kind of prophecy without noticing the author is an AI startup investor.

The post itself is obviously written by AI, as the author later admitted, in a spooky doomer style to funnel people to subscribe to his account. This is typical chatbot slop—and total nonsense:

“It had something that felt, for the first time, like judgment. Like taste.”

Come on.

All of it is appealing to emotion. It’s just marketing. There is no science or technical rationale. It’s just how it feels, bro.

As someone who has both worked with AI for decades and built immersive entertainment projects for properties like Halo and The Dark Knight, I can tell you the post is just world-building using elementary storytelling tactics. The author asked a chatbot to tell a story about chatbots, and it did so in its typical fashion: cliches, unfounded assertions, and appeals to emotion. Nevertheless, numerous well-meaning people have cited it as a harbinger of “something big.”

The “something big” is really several initial public offerings (IPOs) scheduled this year by AI companies: Anthropic (Opus, Claude), OpenAI (ChatGPT), and SpaceX, which at Elon Musk’s direction acquired his company xAI (Grok) for a ridiculous $250 billion, a transaction purely designed to protect the investors in his failing company.

Collectively, these companies are expected to be valued at over $2 trillion by the end of 2026. That’s a lot of money, and the investors need institutions and the retail public to believe it makes sense.

Spoiler alert: It does not make sense.

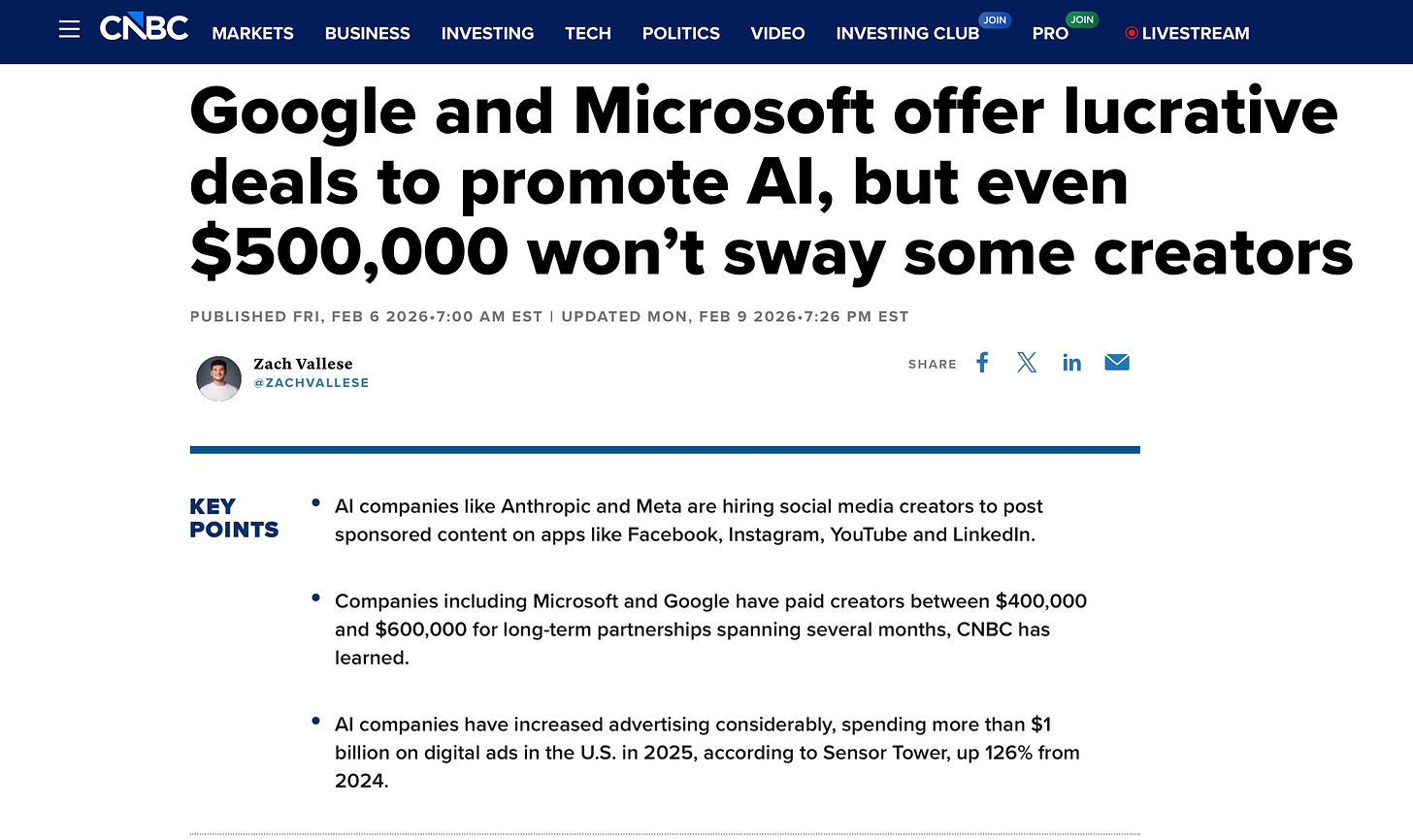

Many of the people you may see online posting effusively about “something big” are being paid very well for it. Microsoft and Google are reportedly paying $400K-600K to influencers to tell you how amazing the chatbot future is going to be. Or how scary, depending on the marketing campaign.

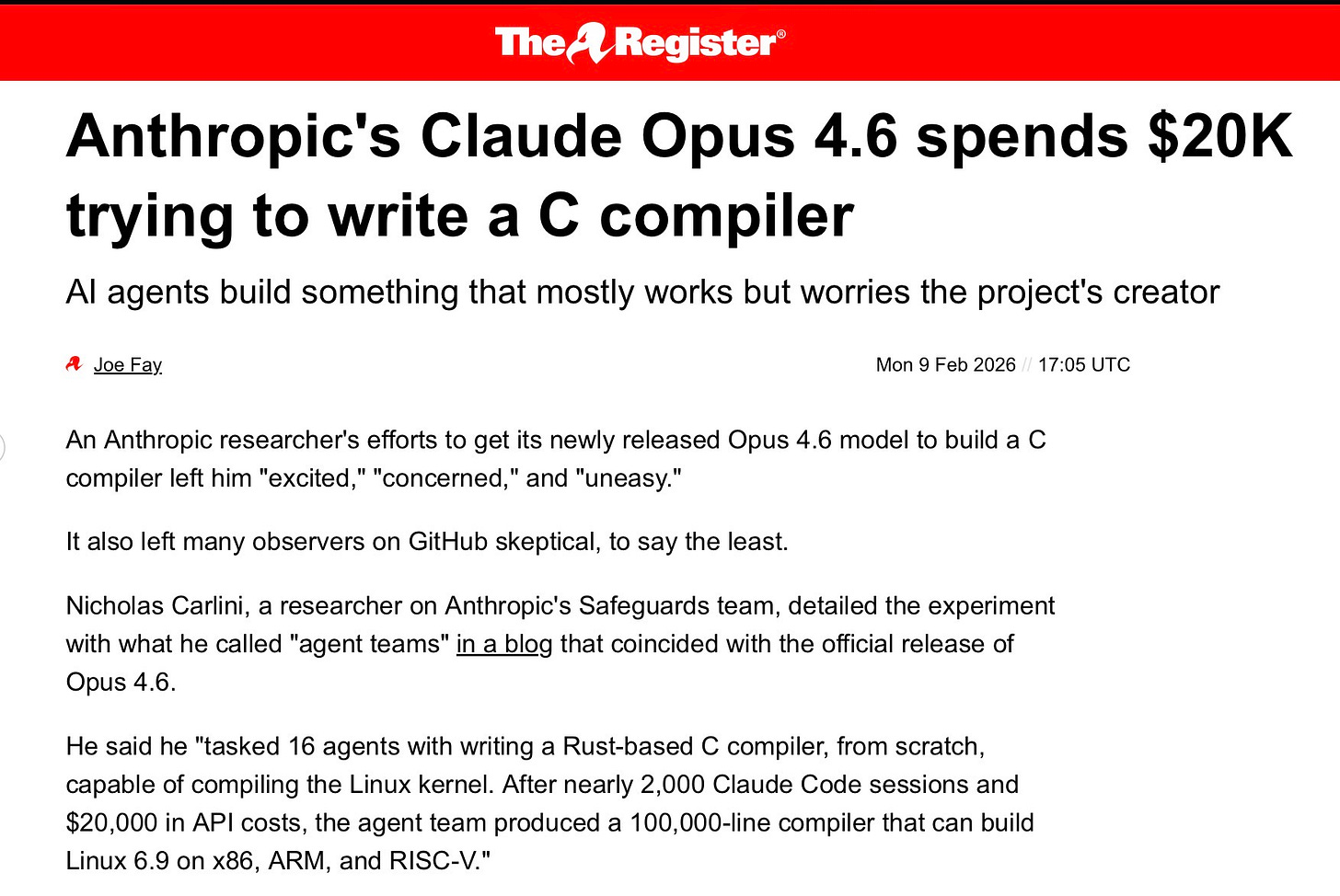

As another example of chatbot hype gone wild, Anthropic, which is scheduled to go public this year at a valuation of more than $380 billion, made a huge deal out of an experiment in which it tried to build one of the most thoroughly documented pieces of software in the world, a C compiler. It used 16 chatbots running for two weeks, at a cost of $20,000 in energy alone, and got a rickety pile of junk that kind of works, sometimes.

Nevertheless, the Anthropic engineer claims to be “excited,” “concerned,” and “uneasy.”

But the details of the results are important. The code is not just buggy (it could compile Linux but not “hello world”); it is bloated, inefficient, and brittle. It’s not actually useful for production code, even as a starting point. It is, in reality, just a hallucination of a C compiler, smashed together from public codebases and brute electrical force.

This is typical of these companies. Every time the AI industry finds a problem it can only solve badly, it responds by attempting something more complicated instead of making something that actually works. And every time, lo and behold, it just makes a worse version of the more complicated thing. It’s blind, suicidal accelerationism.

The big “progress” you hear from these companies about “agents” is just putting a chatbot in a loop and throwing more and more energy at trying to get a chatbot to do something it will never be able to do—think.
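To make the "chatbot in a loop" point concrete, here is a minimal sketch of that pattern. It is an illustration only, not any vendor's actual agent code: `run_agent`, `fake_model`, the `DONE` stop marker, and the `max_steps` cap are all hypothetical names made up for this example, and the "model" is a stand-in function rather than a real chatbot API.

```python
from typing import Callable


def run_agent(model: Callable[[str], str], task: str, max_steps: int = 5) -> list[str]:
    """Call `model` over and over, feeding every earlier reply back in as context.

    Stops when a reply ends with the (hypothetical) marker "DONE" or when
    `max_steps` calls have been made, whichever comes first.
    """
    transcript = [task]
    for _ in range(max_steps):
        # The whole "agent" step: send the accumulated text, append the reply.
        reply = model("\n".join(transcript))
        transcript.append(reply)
        if reply.strip().endswith("DONE"):
            break
    return transcript


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a chatbot: declares itself finished on its third turn."""
    turn = prompt.count("\n") + 1  # one line per earlier transcript entry
    return f"step {turn}" if turn < 3 else "step 3 DONE"


if __name__ == "__main__":
    for line in run_agent(fake_model, "write a C compiler"):
        print(line)
```

Under these assumptions, each extra pass through the loop is simply another call to the same model with a longer prompt, which is why running it longer costs more energy.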

What we’re seeing increasingly in AI is what we’ve already seen in cryptocurrency—not a movement to find a useful technology, but a cult devoted to finding God in the metaverse. The alternating doomerism and ecstatic performances of AI influencers promote something more akin to religious apocalyptic eschatology than sober takes on technology.

Moreover, the technology itself feeds on people’s psychological vulnerabilities, amplifying the kind of revelatory illusion that adds to a paid doomerist’s arsenal.

All of it is about keeping the biggest bubble in American history afloat, a bubble currently making Elon Musk a paper near-trillionaire, and making the most toxic company on the planet, Palantir, also the most overvalued stock in market history.

But what’s happening beneath is the largest wealth transfer ever: $5,000,000,000,000 ($5 trillion) projected to be “invested” in “AI infrastructure” in the next five years, much of which will benefit the worst people in the world.

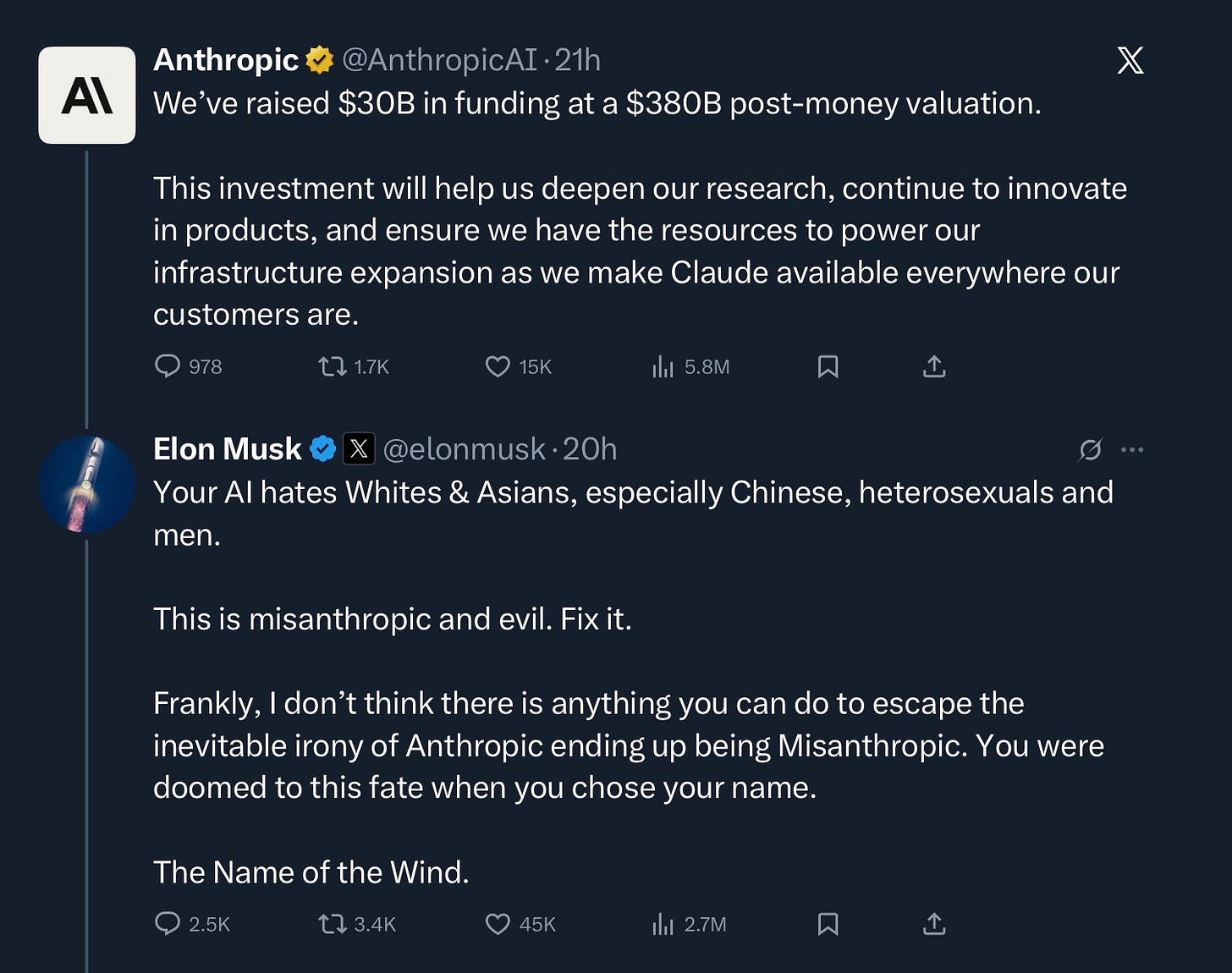

For example, Elon Musk posted the below, which does several deliberately deceptive and dangerous things, in addition to being wildly racist:

He treats a chatbot as something with an ideology, and a goal—as something that can itself be “evil.” This feeds the idea that LLMs are somehow conscious, or have intent. This is quasi-religious nonsense designed to make a software feature seem like a conscious being.

He frames his competition as a combatant in an information race war against “Whites & Asians, especially Chinese” (an allowance used to deflect from his pathological white supremacy), both to smear Anthropic and to divide and conquer its audience through sheer racism.

He promotes eugenicist, Dark Enlightenment propaganda under the cover of industry competition.

There are real dangers and real opportunities with AI. Neural networks, which are all that chatbots really are underneath, have been useful for decades. I personally find ChatGPT to be good as a targeted search tool.

It’s not that the technology is useless or that there aren’t real concerns about it; it’s just that it’s not going to take everyone’s jobs, or end the world. Unless, that is, we do something utterly insane like pump $5 trillion into it with absolutely no way to get it back.

The eschatology of the AI industry is a circular spiral. It insists that if we give the industry trillions of dollars, AI will improve so much that there will be a good return on investment, but also that a huge number of people will lose their jobs and the economy will be massively disrupted. Also, it might go out of control and kill everybody. This is, according to this train of thought, why we have to accelerate faster into the brick wall.

It’s like the train station in Harry Potter, Platform nine-and-three-quarters, where you just have to walk through a wall to the magical world. Trust me, bro.

Just don’t try this as a Muggle.

I urge everyone involved in this collective psychosis to calm down. Stop feeding into this dangerous hype-bubble. Stop inflating this thing that you know won’t end well.

To everyone else, the best thing we can all do is just stop giving these charlatans money until they stop driving us into a wall, if they ever do.

My podcast is @radicalizedpod & YouTube — Livestream is Fridays at 3PM PT.

Bluesky 🦋: jim-stewartson

Threads: jimstewartson